On Friday, October 25th, Google announced the release of the BERT algorithm. BERT (Bidirectional Encoder Representations from Transformers) is a bidirectional pre-training technique for natural language processing.

To understand exactly what BERT does, we first need to break down what “bidirectional natural language processing pre-training” means. Natural language processing (NLP) is a branch of artificial intelligence created to help bridge the gap between how humans communicate and what computers understand. A pre-trained representation can be either context-free or contextual. A context-free model (which was previously used) generates a single representation for each word, regardless of the words around it. Google provided the following example of a context-free model:

The word “bank” would have the same context-free representation in “bank account” and “bank of the river.”

A contextual model, by contrast, can be unidirectional or bidirectional and generates a representation of each word based on the other words in the sentence.

In the sentence “I accessed the bank account,” a unidirectional contextual model would represent “bank” based on “I accessed the” but not “account.” However, BERT represents “bank” using both its previous and next context — “I accessed the … account” — starting from the very bottom of a deep neural network, making it deeply bidirectional.
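The difference between the three kinds of model can be sketched in a few lines of Python. This is a toy illustration only, not how BERT is implemented: the function names are hypothetical, and each one simply returns the word together with the context words that kind of model would be allowed to look at.

```python
def context_free(tokens, i):
    """Context-free: the representation depends on the word alone."""
    return (tokens[i], ())


def unidirectional(tokens, i):
    """Left-to-right: only the words before position i are visible."""
    return (tokens[i], tuple(tokens[:i]))


def bidirectional(tokens, i):
    """Bidirectional: the words on both sides of position i are visible."""
    return (tokens[i], tuple(tokens[:i] + tokens[i + 1:]))


sentence = "I accessed the bank account".split()
i = sentence.index("bank")

print(context_free(sentence, i))    # ('bank', ())
print(unidirectional(sentence, i))  # ('bank', ('I', 'accessed', 'the'))
print(bidirectional(sentence, i))   # ('bank', ('I', 'accessed', 'the', 'account'))
```

Only the bidirectional version sees “account,” which is the word that tells a reader this “bank” is a financial one.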

Essentially, with BERT, Google can interpret the appropriate meaning of a word by looking at the words that surround it, rather than processing words one by one in order. As a result, Search will be better able to understand queries and content written in natural, conversational language.

It is important to note that BERT does not replace RankBrain, which was the first artificial intelligence method used by Google for understanding queries. BERT is merely an additional method for understanding context and queries.

Examples

Below are examples provided by Google that show BERT’s ability to better understand the intent behind a user’s search:

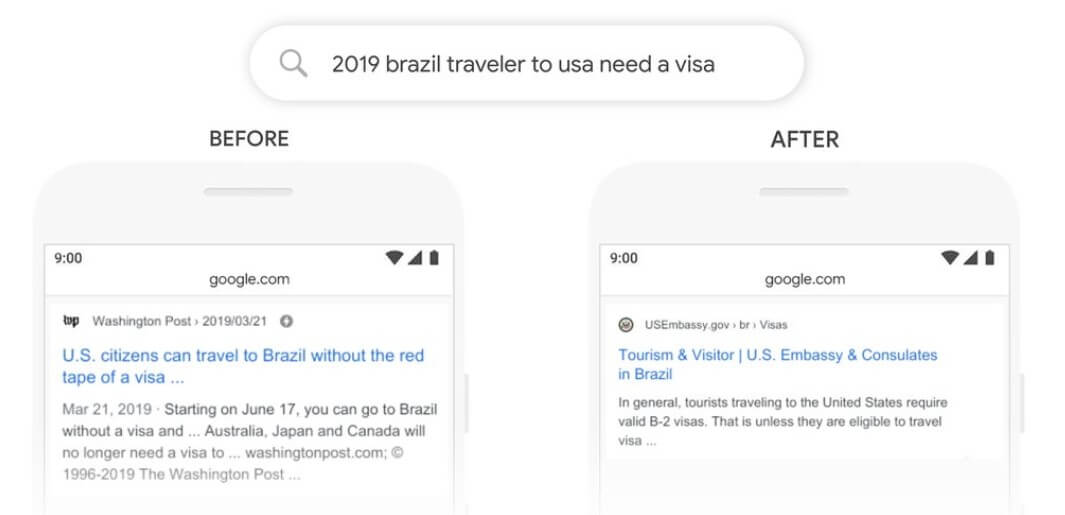

Search Example:

Here’s a search for “2019 brazil traveler to usa need a visa.” The word “to” and its relationship to the other words in the query are particularly important to understanding the meaning. It’s about a Brazilian traveling to the U.S., and not the other way around. Previously, our algorithms wouldn’t understand the importance of this connection, and we returned results about U.S. citizens traveling to Brazil. With BERT, Search is able to grasp this nuance and know that the very common word “to” actually matters a lot here, and we can provide a much more relevant result for this query.
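One way to see why a small word like “to” can carry so much meaning is with a toy Python sketch. This is an illustration under stated assumptions, not a description of Google’s earlier algorithms: the `STOP_WORDS` set and the `keyword_bag` helper are hypothetical, and they stand in for any approach that discards common words and ignores word order.

```python
# Hypothetical list of "unimportant" words that a simple matcher might discard.
STOP_WORDS = {"to", "a", "the", "of", "for"}


def keyword_bag(query):
    """Reduce a query to an unordered set of its non-stop-word terms."""
    return frozenset(w for w in query.lower().split() if w not in STOP_WORDS)


brazil_to_usa = "2019 brazil traveler to usa need a visa"
usa_to_brazil = "2019 usa traveler to brazil need a visa"

# The two queries ask opposite questions, yet their keyword bags are identical.
print(keyword_bag(brazil_to_usa) == keyword_bag(usa_to_brazil))  # True
```

Once order and the word “to” are thrown away, nothing is left to say who is traveling where, which is the nuance the example above describes.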

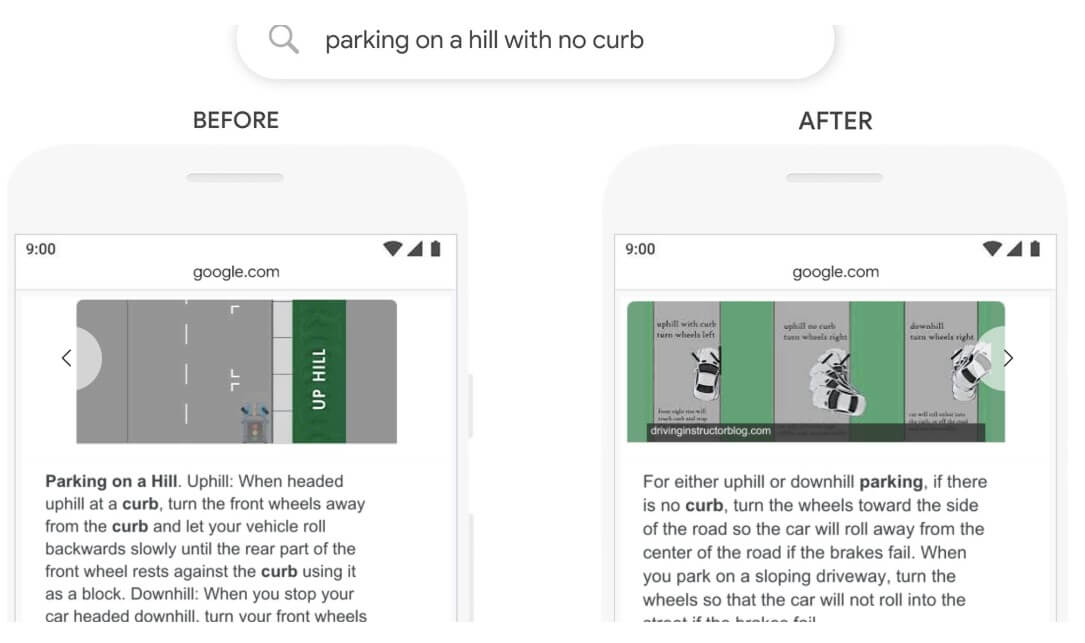

Featured Snippet Example:

In the past a query like this would confuse our systems – we placed too much importance on the word “curb” and ignored the word “no”, not understanding how critical the word was to appropriately respond to this query. So we’d return results for parking on a hill with a curb.

Implications for Brands

Google announced that BERT will impact about 10% of all English-language queries (with plans to expand to other languages in the future). For featured snippets, however, the update is already available globally.

So how will BERT impact SEO and rank? Brands are unlikely to see a substantial impact from the update. Why? As previously mentioned, BERT allows search engines to understand longer, more conversational queries, which in the SEO world means long-tail queries. Brands, however, are unlikely to be tracking these long-tail queries, as they tend to track short-tail queries that send high volumes of traffic to the brand’s website. If a brand’s SEO strategy isn’t targeting long-tail queries, the brand is less likely to see BERT’s impact.

In the next few weeks and months, site owners may begin to see more long-tail queries appearing in search results for their particular brand. These changes are likely to appear in Google Search Console and BrightEdge’s Data Cube. As a result, brands should consider integrating long-tail keywords into their keyword strategy, as the future of search appears to be heading towards more natural, organic content.
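For brands that want a quick first look at how many longer queries they already track, a rough split by word count is one place to start. The Python sketch below is a minimal illustration, not a standard definition: the `split_by_tail` helper, the four-word threshold, and the sample queries are all assumptions, and a real list would come from an export of whatever tool the brand uses.

```python
def split_by_tail(queries, min_long_tail_words=4):
    """Split queries into (short_tail, long_tail) using a word-count threshold.

    The default threshold of 4 words is an assumption for illustration.
    """
    short_tail, long_tail = [], []
    for query in queries:
        if len(query.split()) >= min_long_tail_words:
            long_tail.append(query)
        else:
            short_tail.append(query)
    return short_tail, long_tail


# Hypothetical sample of tracked queries.
tracked = [
    "bank account",
    "visa",
    "2019 brazil traveler to usa need a visa",
]

short_tail, long_tail = split_by_tail(tracked)
print(short_tail)  # ['bank account', 'visa']
print(long_tail)   # ['2019 brazil traveler to usa need a visa']
```

Word count is only a proxy. Search volume and intent matter as well, so the threshold should be tuned to the brand’s own data.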

How Performics Can Help

While other agencies focused on purely technical SEO considerations may be at a loss as to how to optimize content for the BERT algorithm, Performics’ content marketing model is predicated on this sort of inevitable technological advancement. Performics offers an end-to-end, performance-driven content solution for brands, focused on intent-based content creation. Performics created a proprietary research unit, the Intent Lab, in partnership with Northwestern University to uncover intent and apply it to our clients’ content optimization strategies. The Intent Scoring Algorithm enables brands to classify keywords by intent and align messaging with consumers’ stage in their journey. This process allows Performics to create unique, relevant and meaningful copy for every stage in the consumer journey.

We intentionally hire copywriters who come from a number of different backgrounds, not just digital. Their skill sets range from journalism to creative writing, from stage and film scripting to academic research. That’s because we not only pride ourselves on having a copywriting team that is well-versed in SEO, but we also want them to write primarily for the audience’s benefit: to reach the person behind the click. They write copy that is both SEO-optimized and intent-focused.

With the implementation of the BERT update, search is one step closer to understanding naturalistic, human communication. When it comes to performance content, Performics is positioned to not only make the most of the current Search landscape for your brand, but also deliver top-tier content that will continue to perform strongly as Search technology becomes more and more advanced. Performics recommends that brands assess their overall content strategy, particularly ensuring copy is focused not only on integrating more long-tail keywords but also on conversational content that provides unique value to users.