Google – both the search engine and the organization – is widely considered to be omniscient. And why wouldn’t it be? It knows everything, has all the answers, and is the closest, most tangible thing we have to an all-knowing, God-like figure in our lives. This is why, when this all-knowing figure shows signs of bias, most of us are willing to try to explain it away.

So what if the Image search result for “CEO” only brings up images of white men? So what if Google claims that four US presidents were part of the KKK? If Google is saying so, it must be correct, right? Won’t Google know best? This is where most of us are wrong.

Google has a major bias problem.

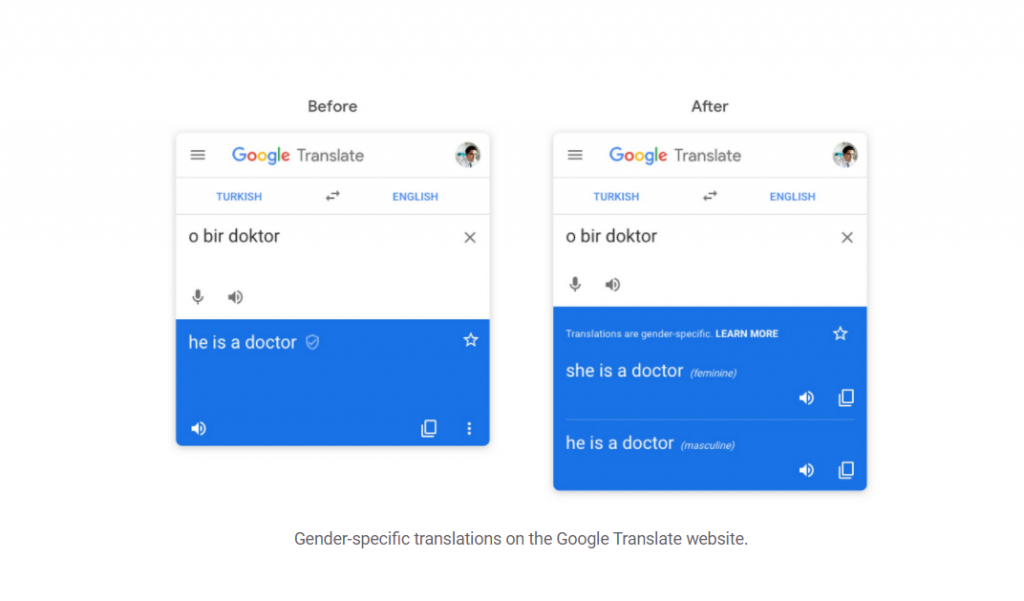

There are two kinds of bias that Google’s results exhibit. The first is technical, and it leads to results such as the infamous 2015 instance where Google Photos was found to identify black people as gorillas. Similarly, putting gender-neutral sentences through Google Translate has it assigning male pronouns to engineers and female ones to nurses. Though Google has since apologized and corrected these problems, they weren’t the first instances of racial and gender bias in its results, and they certainly won’t be the last. Google’s AI and machine learning technology is trained by reading massive amounts of content across the internet, and it picks up people’s racist, misogynist, and xenophobic opinions along the way.

Source: Screenshot from the Google Blog

The second kind of bias that Google exhibits stems directly from the search engine’s perceived omniscience, something Danny Sullivan from Search Engine Land calls its “One True Answer” problem. When a user searches for something on Google, more often than not, they won’t have to scour through the search results themselves to find the answer they’re looking for. Instead, the answer will be presented directly at the top of the page, in the answer box.

Greater emphasis on user comfort and convenience over the last several years has led to Google simplifying the user experience by presenting featured snippets of information that directly answer a user’s question. This information is often what the AI deems to be the most relevant answer from the first page of Google results. It’s very easy to think that this featured snippet is the “One True Answer” to your question. If Google, which has access to literally all the information in the world, claims something is the right answer, it must be true, right?

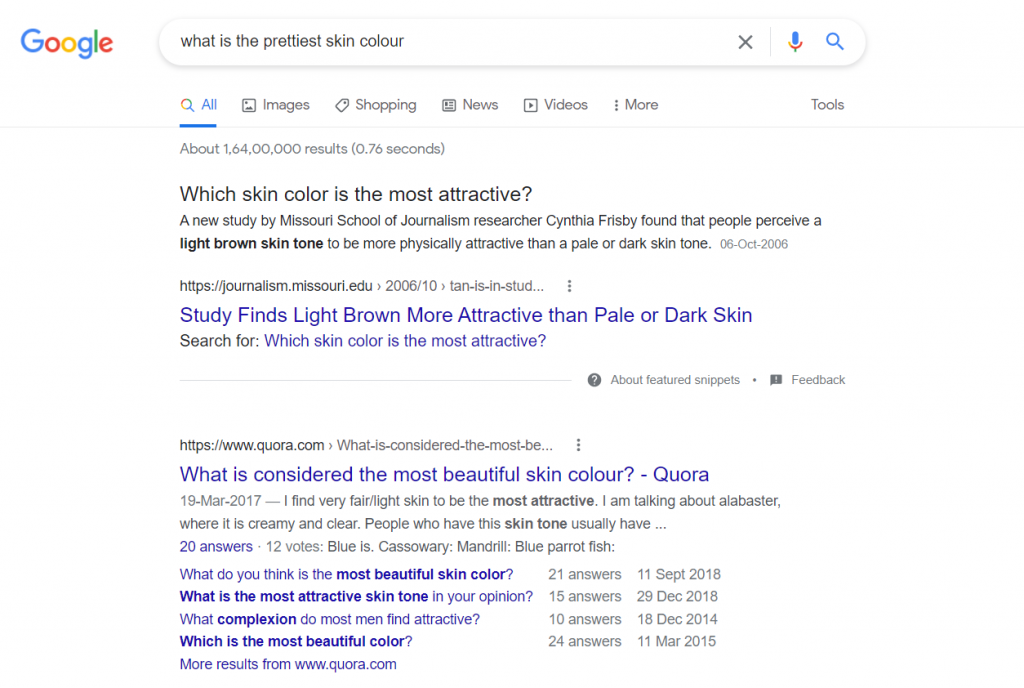

Not necessarily. Many times, Google’s featured snippets are just wrong. Since Google can’t differentiate between truth and fiction, it can claim that Barack Obama is the king of the United States and give definitive answers to subjective questions such as ‘what is the prettiest skin color’ or ‘can money buy happiness’.

Source: Screenshot from Google Search

Google’s bias problem has never been more relevant than now, and a greater focus on context might be the answer. At the September 2021 Search On event, the company revealed plans for several new features aimed at increasing context in the answers given by the search engine. This, in turn, will encourage users to ask more detailed questions as well. The simple list of ten relevant links has long been phased out in favor of more detailed knowledge panels, featured snippets of webpages, and answers to similar questions, but this latest update aims at going one step further.

Now, Google is going to allow users to understand more about the sources of the search results they see, provide more context about how much information is available on a particular topic, and utilize the Multitask Unified Model (MUM) to make the entire search process more streamlined.

This involves combining different forms of search to get results that are as detailed and relevant as possible. For instance, if you want to buy socks with a certain pattern that’s on a shirt, you can combine text (“socks”) and image (“printed shirt”) searches to find the exact results that you’re looking for.

Google is also redesigning the entire search results page for maximum convenience. If you’re trying to learn about a topic, Google will automatically suggest “things to know” that will redirect you to helpful information that you may not have known to look for. Similarly, you’ll soon get options to further refine or broaden searches, allowing you to gain a never-seen-before level of insight without even leaving the page. If you want to shop, you can now do so directly from the search results page, with Google’s 24-billion-product-strong Shopping Graph inventory allowing you to window shop, check the inventory of local stores, and read brand reviews all in one place.

How does the question of bias come into this? On the one hand, this update prevents complicated questions from throwing up black-or-white answers that don’t do them justice. Greater emphasis on context means that users are more likely to narrow down their searches to exactly what they’re looking for, and Google is more likely to provide contextual information that will help users make informed decisions. Instead of taking a singular – often wrong – stand, Google will now show users the different perspectives that exist on a subject. They’ve also started providing more information about the results they display, helping users make informed decisions about the sources they choose to believe.

On the other hand, greater convenience means that you’re more likely to be redirected to Google’s own products, spend more time on Google’s own pages, and learn information directly from Google instead of the third-party site that the information is from. Fewer clickthroughs mean that independent creators, most of whom rely almost entirely on traffic from Google, don’t get to reap the rewards of their hard work.

In response to this, Pandu Nayak, Vice President of Search, told The Verge that Google needs to continuously “build compelling experiences” for users to remain relevant in the increasingly cutthroat search engine landscape. As of now, Google sends an increasingly large number of users around the internet every year. Without such constant innovation, he claims they “will not be around to send traffic to the web” in the future at all.

While this answer sidesteps the question at hand entirely, Google’s growing popularity, and thus authority, gives rise to another question: What is the viewpoint through which Google is speaking? With millions of perspectives given a voice through the internet, how does Google choose the most reliable and objective approach to a subject? The answer is simple: It doesn’t.

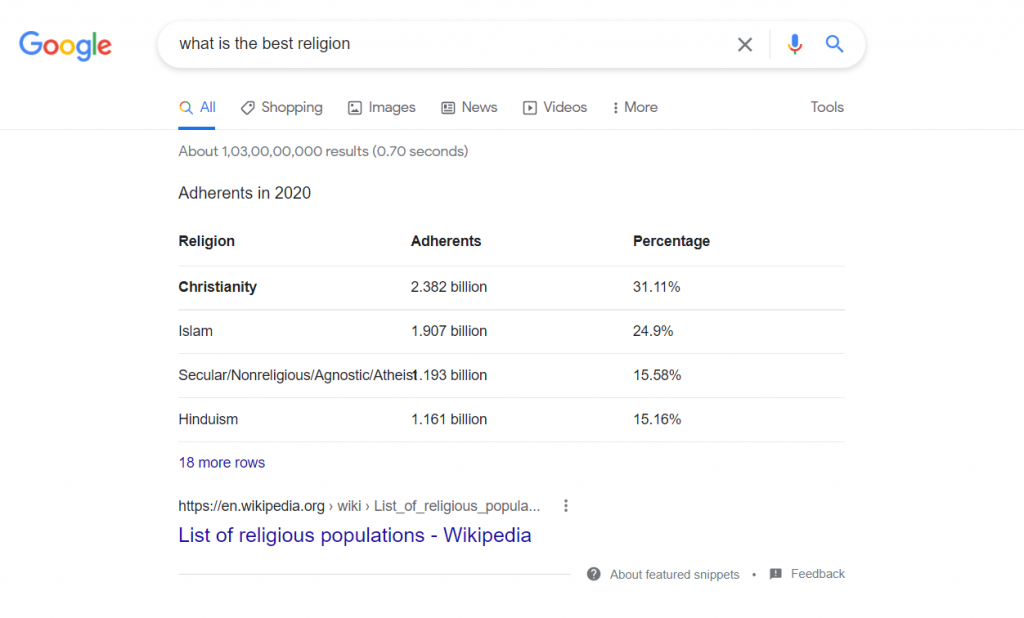

So far, Google executives have answered this question by focusing on the most objective aspects of bias, such as a website’s credibility and authoritativeness. As reported by The Verge, Google SVP Prabhakar Raghavan says that domains where Google could be accused of “excessive editorializing” will be avoided entirely. For instance, the “things to know” boxes won’t appear for searches of a sensitive nature.

Source: Screenshot from Google

Here lies the conundrum: Isn’t this filtration itself a form of bias? As per a Google spokesperson, however, they aren’t “allowing or disallowing specific curated categories, but [their] systems are able to scalably understand topics for which these types of features should or should not trigger”.

Another step Google has taken to avoid bias in its search results is simply not launching products that may be susceptible to such errors. For instance, features involving large language models, such as answering complicated questions that include multiple factors, were demonstrated earlier this year but aren’t going to be available to the public quite yet. This is because all the solutions to bias in language models have been Band-Aid solutions at best, such as the removal of gendered pronouns entirely in Gmail’s Smart Compose feature. Such solutions simply target the consequences – and not the root cause – of such biases. And for the near future, this is likely the best solution we can possibly get.

We see biases in AI and technology because, at the end of the day, there is bias in all content. Every webpage that Google’s crawlers go over is written from a certain point of view, simply because it has been authored by a human with particular lived experiences, perspectives, and – you guessed it – biases. The solution isn’t to get rid of bias entirely, since that would be impossible – not to mention that it would raise yet another question of whose perspective could be considered completely unbiased at all – but to ensure that there is a healthy mix of perspectives for the AI to learn from.

When there is an equal amount of information fed to an AI from various communities and languages, it’s less likely to accept only one as “valid”. For more divisive topics such as religion, politics, and conspiracy theories, the best approach is no approach – which Google is already doing. Google’s revamped search process has raised many questions about search engine bias, and though it doesn’t have all the answers quite yet, it’s taking steps in the right direction.

Credit: Tanvi Rao
